Text-to-SQL with Indirect Supervision

Writing SQL queries is difficult for users without technical expertise, and generating them automatically from natural language questions remains a challenging task. Traditional approaches to this problem have relied heavily on annotated datasets that pair natural language questions with their corresponding SQL queries. However, collecting and annotating these datasets is labor-intensive and requires in-depth knowledge of both SQL and the structure of the underlying databases.

Our Approach

To overcome these challenges, we propose a new learning paradigm that uses indirect supervision through answers rather than SQL queries. This method takes advantage of the large number of question-answer pairs readily available on the internet and significantly reduces the cost of dataset creation.

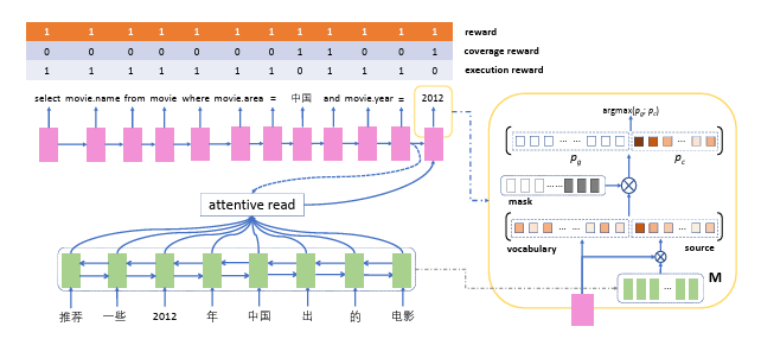

The model we developed, called SQLGen, integrates reinforcement learning with an end-to-end neural network architecture. It leverages the COPYNET framework, which utilizes a copying mechanism to extract relevant information from the input questions. SQLGen learns to generate SQL queries that retrieve the correct answers from a database, guided by two key types of rewards: coverage reward and execution reward. These rewards help to refine the model's ability to generate correct SQL queries and ensure that they retrieve the correct results when executed.
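
To make the idea of an execution reward concrete, here is a minimal sketch using Python's built-in `sqlite3` module. It is an illustration only, not the implementation from the paper: the function name `execution_reward`, the toy `movie` table, and the reward values (+1.0, 0.0, -1.0) are all assumptions chosen for the example.

```python
import sqlite3


def execution_reward(conn, sql, gold_answer):
    """Hypothetical execution reward (values are assumptions, not from the paper).

    +1.0 if the query runs and its first-column results match the gold answer,
     0.0 if the query runs but returns a different result set,
    -1.0 if the query cannot be executed at all.
    """
    try:
        rows = conn.execute(sql).fetchall()
    except sqlite3.Error:
        # Malformed or otherwise non-executable SQL.
        return -1.0
    predicted = {row[0] for row in rows if row}
    return 1.0 if predicted == set(gold_answer) else 0.0


# Toy in-memory database, used only for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE movie (title TEXT, genre TEXT, year INTEGER)")
conn.executemany(
    "INSERT INTO movie VALUES (?, ?, ?)",
    [
        ("The Matrix", "sci-fi", 1999),
        ("Inception", "sci-fi", 2010),
        ("Titanic", "romance", 1997),
    ],
)

gold = ["The Matrix"]  # the answer paired with the question; no gold SQL is needed
good = "SELECT title FROM movie WHERE genre = 'sci-fi' AND year = 1999"
partial = "SELECT title FROM movie WHERE genre = 'sci-fi'"
broken = "SELECT title FROM WHERE"

for query in (good, partial, broken):
    print(query, "->", execution_reward(conn, query, gold))
```

The point of the sketch is that the training signal comes from comparing query results with the known answer, so no annotated SQL query is required for the question.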

Key Innovations

- Indirect Supervision: Unlike previous models that rely on directly annotated SQL queries, SQLGen learns from question-answer pairs, making it easier to gather training data.

- Reinforcement Learning: By using a policy-based reinforcement learning approach, SQLGen continuously improves its query generation policy based on feedback from the generated SQL's execution results.

- Compound Reward Mechanism: We introduce a compound reward mechanism that provides fine-grained supervision at the token level in the generated SQL query. This helps SQLGen learn both the logic of the SQL structure and the correctness of the answers it retrieves.
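
As a rough illustration of how token-level rewards can feed a policy-gradient update, the sketch below combines a sequence-level execution reward with a per-token bonus and plugs the result into a REINFORCE-style loss. The exact reward definitions used by SQLGen are in the paper; everything here (the function names `token_rewards` and `reinforce_loss`, the `coverage_bonus` value, and the rule that a token copied from the question earns the bonus) is an assumption made for the example.

```python
import math


def token_rewards(sql_tokens, question_tokens, exec_reward, coverage_bonus=0.5):
    """Hypothetical compound reward: every token shares the execution reward,
    and tokens that also appear in the question get an extra coverage bonus."""
    question = set(question_tokens)
    return [
        exec_reward + (coverage_bonus if tok in question else 0.0)
        for tok in sql_tokens
    ]


def reinforce_loss(token_probs, rewards):
    """REINFORCE-style loss: negative reward-weighted log-likelihood of the
    sampled tokens. Minimizing it raises the probability of rewarded tokens."""
    return -sum(r * math.log(p) for p, r in zip(token_probs, rewards))


question = ["which", "movie", "was", "released", "in", "1999"]
sql = ["SELECT", "title", "WHERE", "year", "=", "1999"]

rewards = token_rewards(sql, question, exec_reward=1.0)
# Probabilities the policy assigned to each sampled token (made-up values).
probs = [0.5] * len(sql)
loss = reinforce_loss(probs, rewards)
print(rewards, loss)
```

In a real system the probabilities would come from the decoder and the loss would be differentiated with respect to the model parameters; the sketch only shows how per-token rewards weight the log-likelihood terms.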

Results

SQLGen outperforms traditional baseline models on multiple datasets, including movie-related questions and academic publication queries. The model shows significant improvements in accuracy when compared to previous approaches, especially when handling complex queries involving multiple conditions and tables.

Notably, SQLGen achieves a higher accuracy on queries that involve multiple conditions (e.g., filtering by both movie genre and release year) compared to those with single conditions. The reinforcement learning mechanism allows the model to explore a wide space of potential SQL queries, significantly improving its ability to generate the correct query.

Conclusion

Our research demonstrates the feasibility of generating accurate SQL queries from natural language questions without the need for direct SQL query annotations. SQLGen not only simplifies the task of creating structured queries but also provides a more efficient way to train models in environments with limited annotated data.

This work opens up new avenues for NLP-driven database querying and can help in building more intuitive systems that let users interact with databases using natural language.

For more details, you can access our full research paper: https://doi.org/10.1016/j.csl.2020.101185

@article{BAI2021101185,
  author = {Ziwei Bai and Bo Yu and Bowen Wu and Zhuoran Wang and Baoxun Wang},
  title = {Learning to generate structured queries from natural language with indirect supervision},
  journal = {Computer Speech \& Language},
  volume = {67},
  pages = {101185},
  year = {2021}
}