AI Is Reshaping Academic Writing—And Journal Policies Are Powerless to Stop It

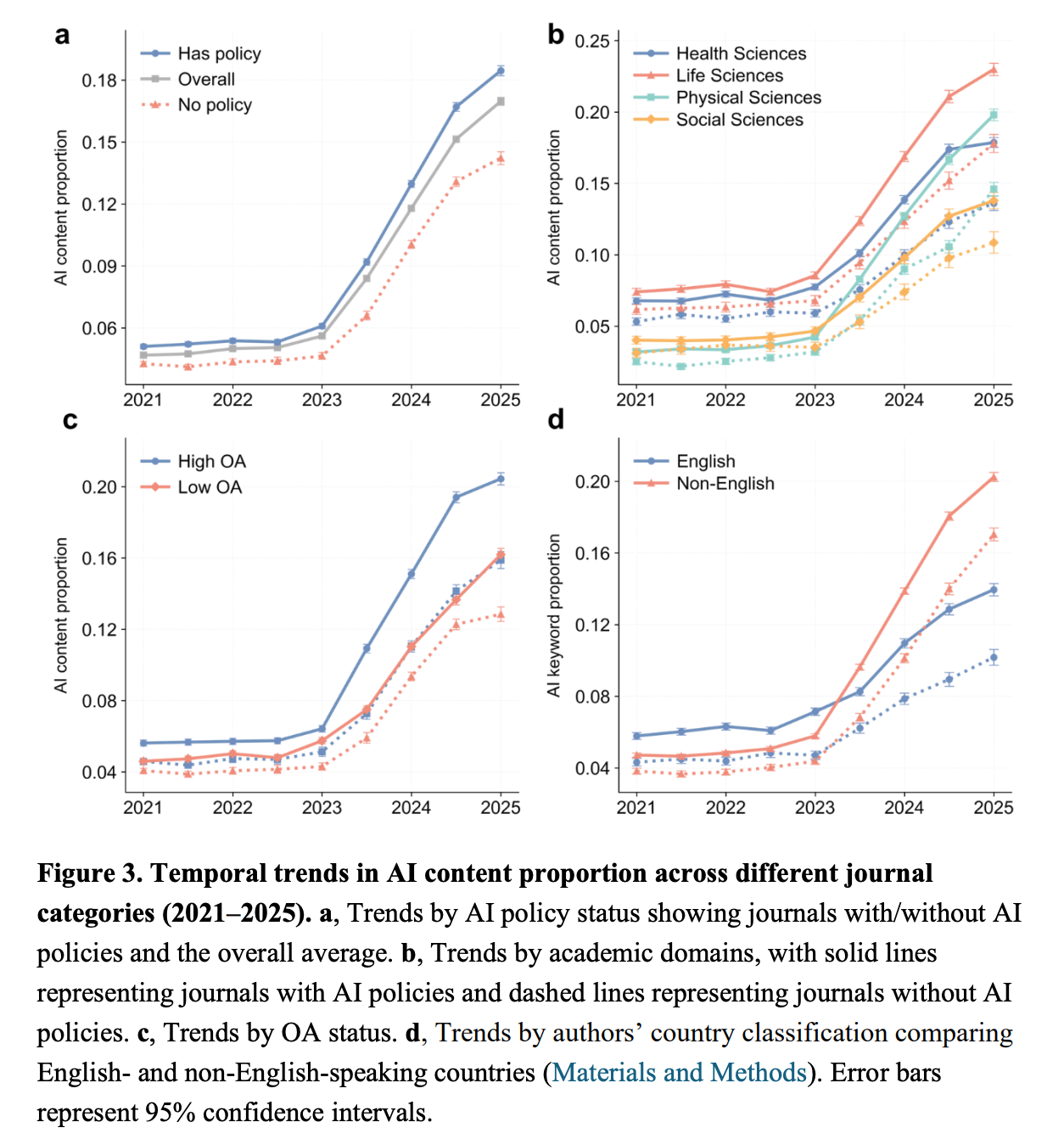

Over the past two years, academic publishers around the world have rolled out “AI usage policies” with impressive urgency: disclosure rules, authorship restrictions, bans on unchecked text generation, ethical guidelines, and more.

At first glance, it looks like scholarly communication has built a protective firewall against AI-generated writing.

But a new large-scale empirical study from Peking University reveals a far harsher truth:

These policies barely work. AI is penetrating academic writing at a speed no rule can contain.

And the academic world may already look very different from what we imagine.

01 | 70% of journals have AI policies—yet they are effectively meaningless

The researchers analyzed AI-related statements from 5,114 JCR Q1 journals and found:

- 70% explicitly published AI usage guidelines

- Most require some form of disclosure

- Strict prohibitions are extremely rare

On paper, the academic community appears to be taking AI seriously.

In practice?

These policies live on websites, not in writing behavior.

Authors simply don’t follow them—and journals have no mechanism to enforce them.

Study (arXiv preprint): https://arxiv.org/pdf/2512.06705

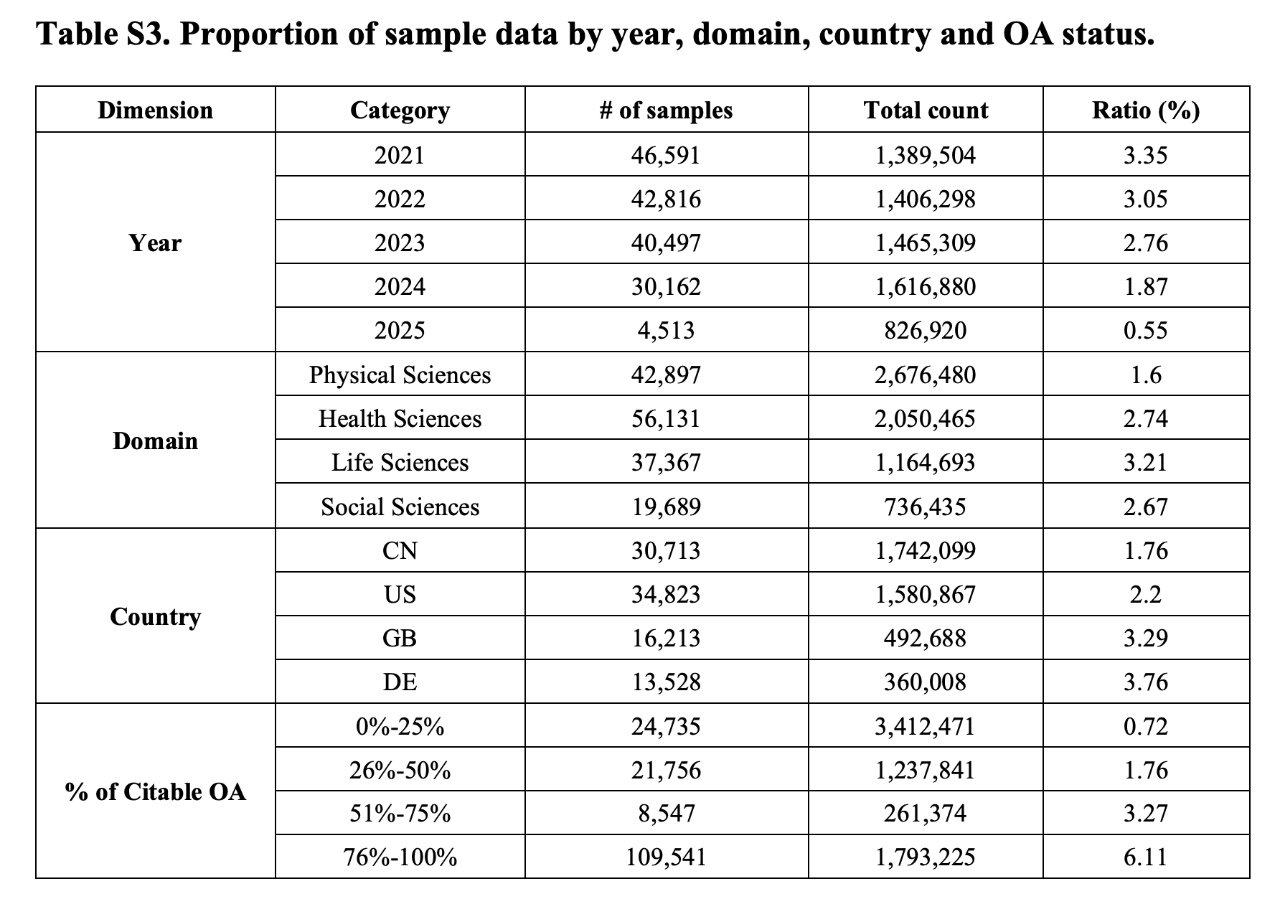

02 | 5.2 million abstracts reveal an explosive surge in AI writing since 2023

By examining 5.23 million abstracts using four independent AI-trace detection methods, the study uncovers a dramatic pattern:

- 2023 was the turning point—AI-assisted writing began skyrocketing

- By 2024–2025, adoption had entered the “full saturation phase”

- The real question is no longer “Do authors use AI?” but “How extensively do they use it?”

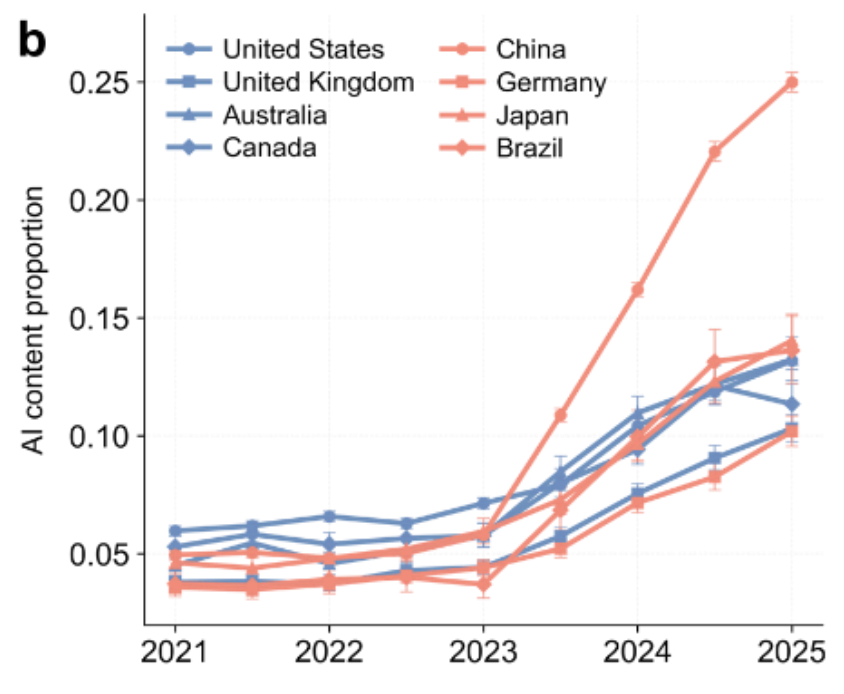

Most strikingly, the rise of AI writing shows no correlation with whether a journal has an AI policy.

Policies or no policies—researchers are using AI anyway.

03 | Journals with AI rules vs. without rules → nearly identical AI adoption curves

Across multiple statistical tests, the researchers found:

- The growth trajectory of AI writing is nearly identical in both groups

- Disclosure rules make no measurable difference

- Prohibitions do not reduce usage

In other words:

Policy has no measurable behavioral effect. The AI wave is unstoppable.

This points to a deeper crisis:

- Academic governance is lagging far behind practice

- Authors do not feel bound by AI guidelines

- Enforcement mechanisms are absent or nonfunctional

We may be moving toward a future where the real question becomes:

Is there any part of the paper still written by a human?

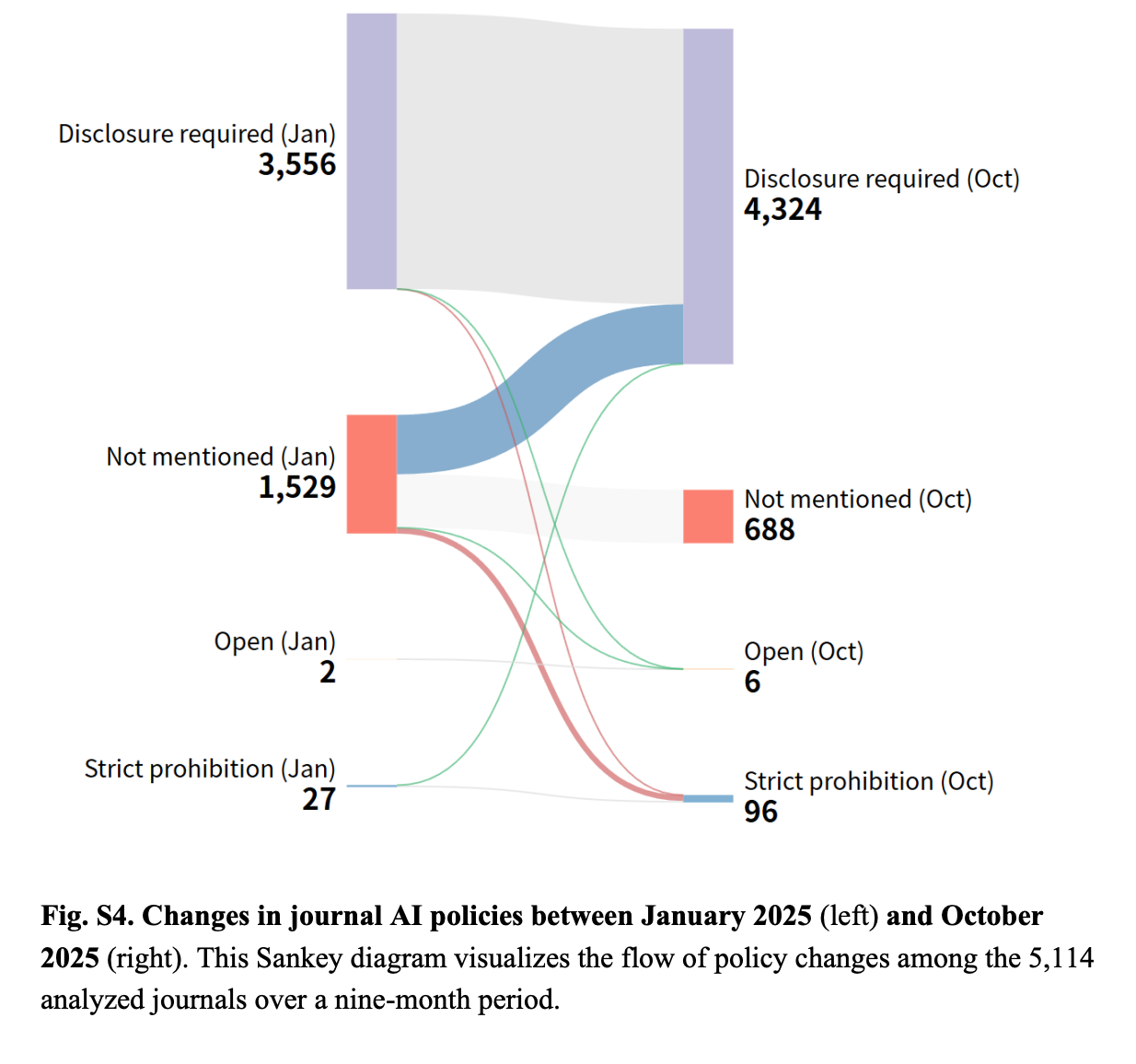

04 | Who is embracing AI the fastest? Three groups stand out

1. Non-English-speaking researchers

The fastest growth comes from:

- China

- Germany

- Japan

- Brazil

AI is rapidly becoming a global tool for linguistic equalization—but it also means:

English fluency will no longer act as a gatekeeper to publication.

2. Physical sciences

Adoption is fastest in fields such as:

- Mathematics

- Computer Science

- Engineering

- Physics

These disciplines rely heavily on structured, formulaic writing—making them fertile ground for accelerated AI adoption.

3. Journals with high open-access (OA) rates

The higher the OA rate, the stronger the AI signals.

Some OA journals may already be drifting toward a new model:

Fast publishing + large-scale AI writing.

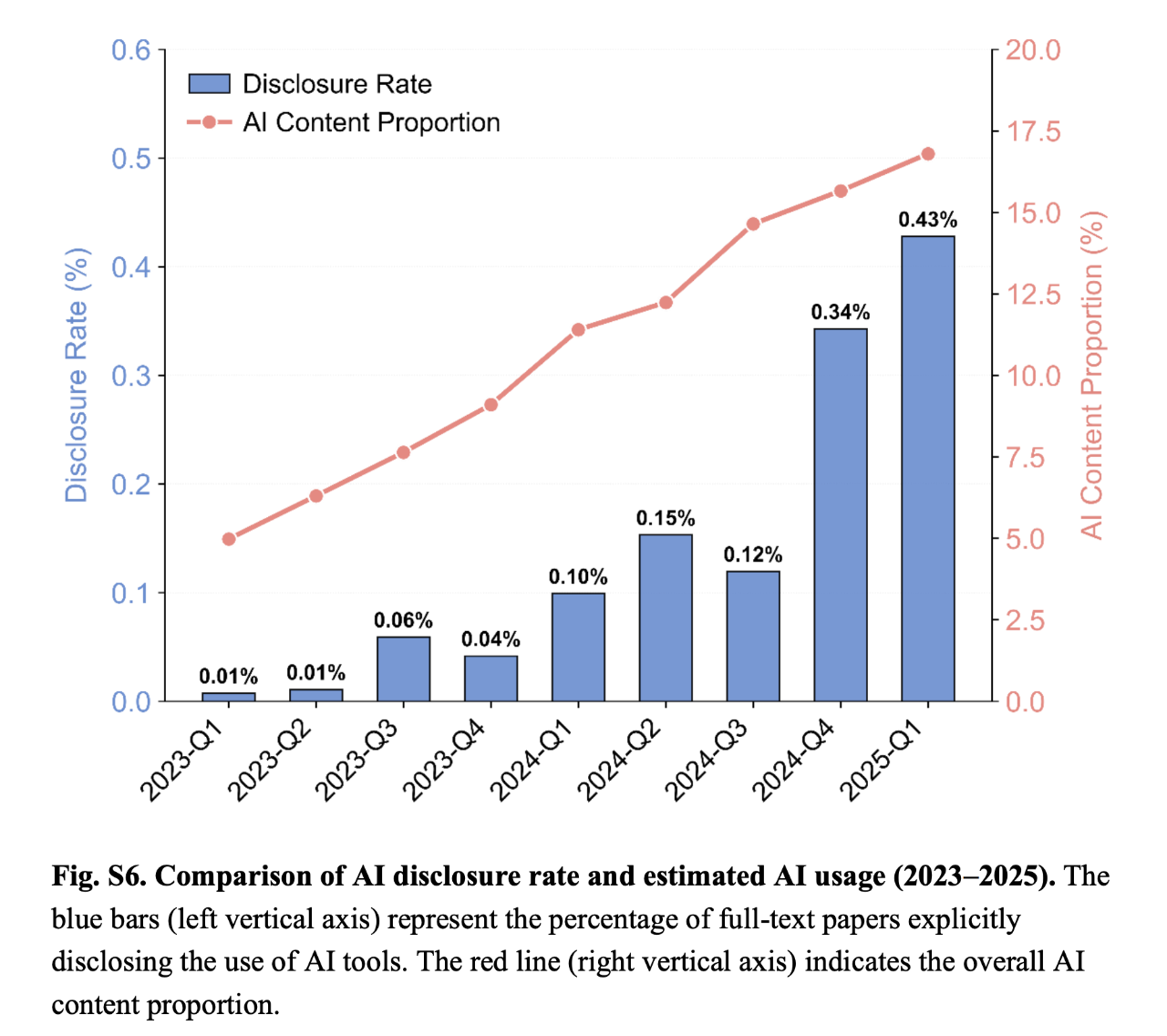

05 | The most shocking statistic: AI disclosure rate ≈ 0.1%

The researchers reviewed 164,579 full-text papers to identify explicit AI usage disclosures.

Among the 75,172 papers from 2023–2025:

Only 76 papers acknowledged the use of AI.

➡️ Disclosure rate: ~0.1%

At the same time, AI trace detection indicates:

- For every 40 papers that used AI, only 1 openly admits it.

The gap is staggering.
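To put the gap in numbers, here is a minimal Python sketch that reproduces the arithmetic from the figures quoted above. The function names are hypothetical, and applying the "1 in 40" ratio to the same 75,172-paper sample is an assumption made for illustration; the study may compute that ratio on a different sample.

```python
# Figures quoted in this article; everything derived from them is plain arithmetic.
papers_2023_2025 = 75_172    # full-text papers from 2023-2025
disclosed = 76               # papers that explicitly acknowledged AI use
users_per_disclosure = 40    # the "1 in 40" ratio (assumed to apply to this sample)


def disclosure_rate(disclosed: int, total: int) -> float:
    """Share of papers that disclose AI use, as a percentage."""
    return 100 * disclosed / total


def implied_ai_papers(disclosed: int, users_per_disclosure: int) -> int:
    """Papers implied to have used AI if only 1 in N users discloses it."""
    return disclosed * users_per_disclosure


rate = disclosure_rate(disclosed, papers_2023_2025)
implied = implied_ai_papers(disclosed, users_per_disclosure)
implied_share = 100 * implied / papers_2023_2025

print(f"Disclosure rate: {rate:.2f}%")            # Disclosure rate: 0.10%
print(f"Implied AI-assisted papers: {implied}")   # Implied AI-assisted papers: 3040
print(f"Implied share of sample: {implied_share:.1f}%")  # Implied share of sample: 4.0%
```

Read this way, the quoted figures imply that roughly 4% of this full-text sample used AI. That is an extrapolation under the stated assumption, not a result reported by the study.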

Many researchers use AI.

Almost none are willing to say so.

Fear of rejection, fear of reputation damage, or simply the ambiguity of “acceptable use” may all contribute.

06 | A governance crisis is emerging in academia

The data paints a worrying picture:

- Policies are ineffective

- Disclosure systems have collapsed

- Detection tools are outdated and easily circumvented

- Ethical norms are being rewritten in real time

The authors argue that existing policies focus too narrowly on:

“Prohibit or disclose”

instead of addressing the core question:

“How should researchers use AI responsibly and skillfully?”

Trying to manage AI writing with current rules is like using traffic lights to control a fleet of jet aircraft.

07 | What this research teaches us about the future

1. AI writing is irreversible

The debate is no longer “Should we use AI?”

The real challenge is: How do we use AI well?

2. Policies must evolve toward “responsible AI,” not rigid bans

Otherwise, academia risks:

- Blurred boundaries of research integrity

- Confusion over human vs. machine authorship

- A breakdown in peer-review assumptions

3. Global research competition will be reshaped

As language barriers shrink, traditional academic power structures may shift.

4. We are standing at the dawn of a transformation in scholarly publishing

The collapse of enforcement means academic writing is inevitably transitioning into a human–AI collaborative ecosystem.

Those who adapt early will define the new standards.

Conclusion: A silent revolution is already underway

This study delivers a sobering message:

AI has already redefined scientific writing—and the rules meant to govern it no longer work.

We are facing a historic turning point:

- The nature of academic writing

- The meaning of authorship

- The ethics of research

- The mechanisms of evaluation and peer review

All will need to be rebuilt.

If you are a researcher, student, editor, or educator, you are already part of this transformation.

The relevant question is no longer:

“Will AI change academia?”

But rather:

“Are you ready for how deeply it already has?”